Tutorial¶

Version 3.2.0 for version 1.8 of the CF conventions.

All of the Python code in this tutorial is available in an executable

script (download, 36kB).

Important

This version of cf is for Python 3 only and there are incompatible differences between versions 2.x and 3.x of cf.

Scripts written for version 2.x but running under version 3.x should either work as expected, or provide informative error messages on the new API usage. However, it is advised that the outputs of older scripts are checked when running with Python 3 versions of the cf library.

For version 2.x documentation, see the older releases page.

Sample datasets¶

This tutorial uses a number of small sample datasets, all of which can

be found in the zip file cf_tutorial_files.zip

(download,

164kB):

$ unzip -q cf_tutorial_files.zip

$ ls -1

air_temperature.nc

cf_tutorial_files.zip

contiguous.nc

external.nc

file2.nc

file.nc

gathered.nc

parent.nc

precipitation_flux.nc

timeseries.nc

umfile.pp

vertical.nc

wind_components.nc

The tutorial examples assume that the Python session is being run from the directory that contains the zip file and its unpacked contents, and no other files.

The tutorial files may be also found in the downloads directory of the on-line code repository.

Import¶

The cf package is imported as follows:

>>> import cf

CF version¶

The version of the CF conventions and

the CF data model being used may be found with

the cf.CF function:

>>> cf.CF()

'1.8'

This indicates which version of the CF conventions are represented by this release of the cf package, and therefore the version can not be changed.

Note, however, that datasets of different CF versions may be read from, or written to netCDF.

Field construct¶

The central construct (i.e. element) to CF is the field construct. The field construct, that corresponds to a CF-netCDF data variable, includes all of the metadata to describe it:

descriptive properties that apply to field construct as a whole (e.g. the standard name),

a data array, and

“metadata constructs” that describe the locations of each cell of the data array, and the physical nature of each cell’s datum.

A field construct is stored in a cf.Field instance, and henceforth

the phrase “field construct” will be assumed to mean “cf.Field

instance”.

Reading field constructs from datasets¶

The cf.read function reads files from disk, or from an OPeNDAP URLs 1, and returns the contents in

a cf.FieldList instance that contains zero or more cf.Field

instances, each of which represents a field construct. Henceforth, the

phrase “field list” will be assumed to mean a cf.FieldList instance.

A field list is very much like a Python list,

with the addition of extra methods that operate on its field construct

elements.

The following file types can be read:

All formats of netCDF3 and netCDF4 files (including CFA-netCDF files) can be read, containing datasets for any version of CF up to and including CF-1.8.

Files in CDL format, with or without the data array values.

PP and UM fields files, whose contents are mapped into field constructs.

A future release of cf will include support for netCDF4 files containing data organised in hierarchical groups, but this is not available in version 3.2.0 (even though it is allowed in CF-1.8).

For example, to read the file file.nc (found in the sample

datasets), which contains

two field constructs:

>>> x = cf.read('file.nc')

>>> type(x)

<class 'cf.fieldlist.FieldList'>

>>> len(x)

2

Descriptive properties are always read into memory, but lazy loading is employed for all data arrays, which means that no data is read into memory until the data is required for inspection or to modify the array contents. This maximises the number of field constructs that may be read within a session, and makes the read operation fast.

Multiple files may be read in one command using UNIX wild card characters, or a sequence of file names (each element of which may also contain wild cards). Shell environment variables are also permitted.

>>> y = cf.read('*.nc')

>>> len(y)

14

>>> z = cf.read(['file.nc', 'precipitation_flux.nc'])

>>> len(z)

3

All of the datasets in one more directories may also be read by replacing any file name with a directory name. An attempt will be made to read all files in the directory, which will result in an error if any have a non-supported format. Non-supported files may be ignored with the ignore_read_error keyword.

>>> y = cf.read('$PWD') # Raises Exception

Traceback (most recent call last):

...

Exception: Can't determine format of file cf_tutorial_files.zip

>>> y = cf.read('$PWD', ignore_read_error=True)

>>> len(y)

15

In all cases, the default behaviour is to aggregate the contents of

all input datasets into as few field constructs as possible, and it is

these aggregated field constructs are returned by cf.read. See the

section on aggregation for full details.

The cf.read function has optional parameters to

allow the user to provide files that contain external variables;

request extra field constructs to be created from “metadata” netCDF variables, i.e. those that are referenced from CF-netCDF data variables, but which are not regarded by default as data variables in their own right;

display information and warnings about the mapping of the netCDF file contents to CF data model constructs;

remove from, or include, size one dimensions on the field constructs’ data;

configure the field construct aggregation process;

configure the reading of directories to allow sub-directories to be read recursively, and to allow directories which resolve to symbolic links; and

configure parameters for reading PP and UM fields files.

CF-compliance¶

If the dataset is partially CF-compliant to the extent that it is not

possible to unambiguously map an element of the netCDF dataset to an

element of the CF data model, then a field construct is still

returned, but may be incomplete. This is so that datasets which are

partially conformant may nonetheless be modified in memory and written

to new datasets. Such “structural” non-compliance would occur, for

example, if the “coordinates” attribute of a CF-netCDF data variable

refers to another variable that does not exist, or refers to a

variable that spans a netCDF dimension that does not apply to the data

variable. Other types of non-compliance are not checked, such whether

or not controlled vocabularies have been adhered to. The structural

compliance of the dataset may be checked with the

dataset_compliance method of the field construct, as

well as optionally displayed when the dataset is read.

Inspection¶

The contents of a field construct may be inspected at three different levels of detail.

Minimal detail¶

The built-in repr function, invoked on a variable by calling that variable

alone at the interpreter prompt, returns a short, one-line description:

>>> x = cf.read('file.nc')

>>> x

[<CF Field: specific_humidity(latitude(5), longitude(8)) 1>,

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K>]

>>> q = x[0]

>>> t = x[1]

>>> q

<CF Field: specific_humidity(latitude(5), longitude(8)) 1>

This gives the identity of the field construct (e.g. “specific_humidity”), the identities and sizes of the dimensions spanned by the data array (“latitude” and “longitude” with sizes 5 and 8 respectively) and the units of the data (“1”, i.e. dimensionless).

Medium detail¶

The built-in str function, invoked by a print call on a field construct,

returns similar information as the one-line output, along with short

descriptions of the metadata constructs, which include the first and last

values of their data arrays:

>>> print(q)

Field: specific_humidity (ncvar%q)

----------------------------------

Data : specific_humidity(latitude(5), longitude(8)) 1

Cell methods : area: mean

Dimension coords: latitude(5) = [-75.0, ..., 75.0] degrees_north

: longitude(8) = [22.5, ..., 337.5] degrees_east

: time(1) = [2019-01-01 00:00:00]

>>> print(t)

Field: air_temperature (ncvar%ta)

---------------------------------

Data : air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K

Cell methods : grid_latitude(10): grid_longitude(9): mean where land (interval: 0.1 degrees) time(1): maximum

Field ancils : air_temperature standard_error(grid_latitude(10), grid_longitude(9)) = [[0.76, ..., 0.32]] K

Dimension coords: atmosphere_hybrid_height_coordinate(1) = [1.5]

: grid_latitude(10) = [2.2, ..., -1.76] degrees

: grid_longitude(9) = [-4.7, ..., -1.18] degrees

: time(1) = [2019-01-01 00:00:00]

Auxiliary coords: latitude(grid_latitude(10), grid_longitude(9)) = [[53.941, ..., 50.225]] degrees_N

: longitude(grid_longitude(9), grid_latitude(10)) = [[2.004, ..., 8.156]] degrees_E

: long_name=Grid latitude name(grid_latitude(10)) = [--, ..., b'kappa']

Cell measures : measure:area(grid_longitude(9), grid_latitude(10)) = [[2391.9657, ..., 2392.6009]] km2

Coord references: grid_mapping_name:rotated_latitude_longitude

: standard_name:atmosphere_hybrid_height_coordinate

Domain ancils : ncvar%a(atmosphere_hybrid_height_coordinate(1)) = [10.0] m

: ncvar%b(atmosphere_hybrid_height_coordinate(1)) = [20.0]

: surface_altitude(grid_latitude(10), grid_longitude(9)) = [[0.0, ..., 270.0]] m

Note that time values are converted to date-times with the cftime package.

Full detail¶

The dump method of the field construct gives all

properties of all constructs, including metadata constructs and their

components, and shows the first and last values of all data arrays:

>>> q.dump()

----------------------------------

Field: specific_humidity (ncvar%q)

----------------------------------

Conventions = 'CF-1.7'

project = 'research'

standard_name = 'specific_humidity'

units = '1'

Data(latitude(5), longitude(8)) = [[0.003, ..., 0.032]] 1

Cell Method: area: mean

Domain Axis: latitude(5)

Domain Axis: longitude(8)

Domain Axis: time(1)

Dimension coordinate: latitude

standard_name = 'latitude'

units = 'degrees_north'

Data(latitude(5)) = [-75.0, ..., 75.0] degrees_north

Bounds:Data(latitude(5), 2) = [[-90.0, ..., 90.0]]

Dimension coordinate: longitude

standard_name = 'longitude'

units = 'degrees_east'

Data(longitude(8)) = [22.5, ..., 337.5] degrees_east

Bounds:Data(longitude(8), 2) = [[0.0, ..., 360.0]]

Dimension coordinate: time

standard_name = 'time'

units = 'days since 2018-12-01'

Data(time(1)) = [2019-01-01 00:00:00]

>>> t.dump()

---------------------------------

Field: air_temperature (ncvar%ta)

---------------------------------

Conventions = 'CF-1.7'

project = 'research'

standard_name = 'air_temperature'

units = 'K'

Data(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) = [[[0.0, ..., 89.0]]] K

Cell Method: grid_latitude(10): grid_longitude(9): mean where land (interval: 0.1 degrees)

Cell Method: time(1): maximum

Field Ancillary: air_temperature standard_error

standard_name = 'air_temperature standard_error'

units = 'K'

Data(grid_latitude(10), grid_longitude(9)) = [[0.76, ..., 0.32]] K

Domain Axis: atmosphere_hybrid_height_coordinate(1)

Domain Axis: grid_latitude(10)

Domain Axis: grid_longitude(9)

Domain Axis: time(1)

Dimension coordinate: atmosphere_hybrid_height_coordinate

computed_standard_name = 'altitude'

standard_name = 'atmosphere_hybrid_height_coordinate'

Data(atmosphere_hybrid_height_coordinate(1)) = [1.5]

Bounds:Data(atmosphere_hybrid_height_coordinate(1), 2) = [[1.0, 2.0]]

Dimension coordinate: grid_latitude

standard_name = 'grid_latitude'

units = 'degrees'

Data(grid_latitude(10)) = [2.2, ..., -1.76] degrees

Bounds:Data(grid_latitude(10), 2) = [[2.42, ..., -1.98]]

Dimension coordinate: grid_longitude

standard_name = 'grid_longitude'

units = 'degrees'

Data(grid_longitude(9)) = [-4.7, ..., -1.18] degrees

Bounds:Data(grid_longitude(9), 2) = [[-4.92, ..., -0.96]]

Dimension coordinate: time

standard_name = 'time'

units = 'days since 2018-12-01'

Data(time(1)) = [2019-01-01 00:00:00]

Auxiliary coordinate: latitude

standard_name = 'latitude'

units = 'degrees_N'

Data(grid_latitude(10), grid_longitude(9)) = [[53.941, ..., 50.225]] degrees_N

Auxiliary coordinate: longitude

standard_name = 'longitude'

units = 'degrees_E'

Data(grid_longitude(9), grid_latitude(10)) = [[2.004, ..., 8.156]] degrees_E

Auxiliary coordinate: long_name=Grid latitude name

long_name = 'Grid latitude name'

Data(grid_latitude(10)) = [--, ..., 'kappa']

Domain ancillary: ncvar%a

units = 'm'

Data(atmosphere_hybrid_height_coordinate(1)) = [10.0] m

Bounds:Data(atmosphere_hybrid_height_coordinate(1), 2) = [[5.0, 15.0]]

Domain ancillary: ncvar%b

Data(atmosphere_hybrid_height_coordinate(1)) = [20.0]

Bounds:Data(atmosphere_hybrid_height_coordinate(1), 2) = [[14.0, 26.0]]

Domain ancillary: surface_altitude

standard_name = 'surface_altitude'

units = 'm'

Data(grid_latitude(10), grid_longitude(9)) = [[0.0, ..., 270.0]] m

Coordinate reference: atmosphere_hybrid_height_coordinate

Coordinate conversion:computed_standard_name = altitude

Coordinate conversion:standard_name = atmosphere_hybrid_height_coordinate

Coordinate conversion:a = Domain Ancillary: ncvar%a

Coordinate conversion:b = Domain Ancillary: ncvar%b

Coordinate conversion:orog = Domain Ancillary: surface_altitude

Datum:earth_radius = 6371007

Dimension Coordinate: atmosphere_hybrid_height_coordinate

Coordinate reference: rotated_latitude_longitude

Coordinate conversion:grid_mapping_name = rotated_latitude_longitude

Coordinate conversion:grid_north_pole_latitude = 38.0

Coordinate conversion:grid_north_pole_longitude = 190.0

Datum:earth_radius = 6371007

Dimension Coordinate: grid_longitude

Dimension Coordinate: grid_latitude

Auxiliary Coordinate: longitude

Auxiliary Coordinate: latitude

Cell measure: measure:area

units = 'km2'

Data(grid_longitude(9), grid_latitude(10)) = [[2391.9657, ..., 2392.6009]] km2

File inspection with cfa¶

The description for every field constructs found in datasets also be

generated from the command line, with minimal, medium or full detail,

by using the cfa tool, for example:

$ cfa file.nc

CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K

CF Field: specific_humidity(latitude(5), longitude(8)) 1

$ cfa -1 *.nc

CF Field: specific_humidity(cf_role=timeseries_id(4), ncdim%timeseries(9))

CF Field: cell_area(ncdim%longitude(9), ncdim%latitude(10)) m2

CF Field: eastward_wind(latitude(10), longitude(9)) m s-1

CF Field: specific_humidity(latitude(5), longitude(8)) 1

CF Field: air_potential_temperature(time(120), latitude(5), longitude(8)) K

CF Field: precipitation_flux(time(1), latitude(64), longitude(128)) kg m-2 day-1

CF Field: precipitation_flux(time(2), latitude(4), longitude(5)) kg m2 s-1

CF Field: air_temperature(time(2), latitude(73), longitude(96)) K

CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K

cfa may also be used to write aggregated field constructs to

new datasets, and may be used with external

files.

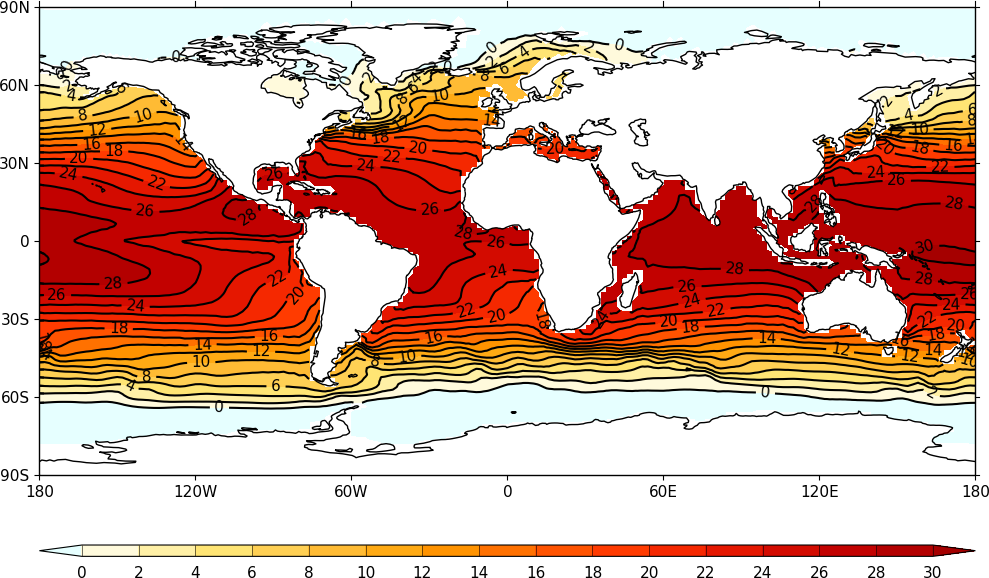

Visualization¶

Powerful, flexible, and very simple to produce visualizations of field

constructs are available with the cfplot package (that needs to be

installed separately to cf, see http://ajheaps.github.io/cf-plot for

details).

Example output of cfplot displaying a cf field construct.¶

See the cfplot gallery for the wide range of plotting possibilities, with example code. These include, but are not limited to:

Cylindrical, polar stereographic and other plane projections

Latitude or longitude vs. height or pressure

Hovmuller

Vectors

Stipples

Multiple plots on a page

Colour scales

User defined axes

Rotated pole

Irregular grids

Trajectories

Line plots

Field lists¶

A field list, contained in a cf.FieldList instance, is an ordered

sequence of field constructs. It supports all of the Python list

operations, such as indexing, iteration, and methods like

append.

>>> x = cf.read('file.nc')

>>> y = cf.read('precipitation_flux.nc')

>>> x

[<CF Field: specific_humidity(latitude(5), longitude(8)) 1>,

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K>]

>>> y

[<CF Field: precipitation_flux(time(1), latitude(64), longitude(128)) kg m-2 day-1>]

>>> y.extend(x)

>>> y

[<CF Field: precipitation_flux(time(1), latitude(64), longitude(128)) kg m-2 day-1>,

<CF Field: specific_humidity(latitude(5), longitude(8)) 1>,

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K>]

>>> y[2]

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K>

>>> y[::-1]

[<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K>,

<CF Field: specific_humidity(latitude(5), longitude(8)) 1>,

<CF Field: precipitation_flux(time(1), latitude(64), longitude(128)) kg m-2 day-1>]

>>> len(y)

3

>>> len(y + y)

6

>>> len(y * 4)

12

>>> for f in y:

... print('field:', repr(f))

...

field: <CF Field: precipitation_flux(time(1), latitude(64), longitude(128)) kg m-2 day-1>

field: <CF Field: specific_humidity(latitude(5), longitude(8)) 1>

field: <CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K>

The field list also has some additional methods for copying, testing equality, sorting and selection.

Properties¶

Descriptive properties that apply to field construct as a whole may be

retrieved with the properties method:

>>> q, t = cf.read('file.nc')

>>> t.properties()

{'Conventions': 'CF-1.7',

'project': 'research',

'standard_name': 'air_temperature',

'units': 'K'}

Note

From a Python script or using the standard Python shell, the result of methods such as the property methods given here will be returned in condensed form, without linebreaks between items.

If a “pretty printed” output as displayed in these pages is preferred, use the ipython shell or wrap calls with a suitable method from Python’s dedicated pprint module.

Individual properties may be accessed and modified with the

del_property, get_property, has_property,

and set_property methods:

>>> t.has_property('standard_name')

True

>>> t.get_property('standard_name')

'air_temperature'

>>> t.del_property('standard_name')

'air_temperature'

>>> t.get_property('standard_name', default='not set')

'not set'

>>> t.set_property('standard_name', value='air_temperature')

>>> t.get_property('standard_name', default='not set')

'air_temperature'

A collection of properties may be set at the same time with the

set_properties method of the field construct, and all

properties may be completely removed with the

clear_properties method.

>>> original = t.properties()

>>> original

{'Conventions': 'CF-1.7',

'project': 'research',

'standard_name': 'air_temperature',

'units': 'K'}

>>> t.set_properties({'foo': 'bar', 'units': 'K'})

>>> t.properties()

{'Conventions': 'CF-1.7',

'foo': 'bar',

'project': 'research',

'standard_name': 'air_temperature',

'units': 'K'}

>>> t.clear_properties()

{'Conventions': 'CF-1.7',

'foo': 'bar',

'project': 'research',

'standard_name': 'air_temperature',

'units': 'K'}

>>> t.properties()

{'units': 'K'}

>>> t.set_properties(original)

>>> t.properties()

{'Conventions': 'CF-1.7',

'project': 'research',

'standard_name': 'air_temperature',

'units': 'K'}

Note that the “units” property persisted after the call to the

clear_properties method because is it deeply associated with

the field construct’s data, which still exists.

All of the methods related to the properties are listed here.

Field identities¶

A field construct identity is a string that describes the construct

and is based on the field construct’s properties. A canonical identity

is returned by the identity method of the field construct,

and all possible identities are returned by the identities

method.

A field construct’s identity may be any one of the following

The value of the

standard_nameproperty, e.g.'air_temperature',The value of the

idattribute, preceded by'id%=',The value of any property, preceded by the property name and an equals, e.g.

'long_name=Air Temperature','foo=bar', etc.,The netCDF variable name, preceded by “ncvar%”, e.g.

'ncvar%tas'(see the netCDF interface),

>>> t.identity()

'air_temperature'

>>> t.identities()

['air_temperature',

'Conventions=CF-1.7',

'project=research',

'units=K',

'standard_name=air_temperature',

'ncvar%ta']

The identity returned by the identity method is, by default,

the least ambiguous identity (defined in the method documentation),

but it may be restricted to the standard_name property and

id attribute; or also the long_name property and

netCDF variable name (see the netCDF interface). See the strict and relaxed keywords.

Metadata constructs¶

The metadata constructs describe the field construct that contains them. Each CF data model metadata construct has a corresponding cf class:

Class |

CF data model construct |

Description |

|---|---|---|

Domain axis |

Independent axes of the domain |

|

Dimension coordinate |

Domain cell locations |

|

Auxiliary coordinate |

Domain cell locations |

|

Coordinate reference |

Domain coordinate systems |

|

Domain ancillary |

Cell locations in alternative coordinate systems |

|

Cell measure |

Domain cell size or shape |

|

Field ancillary |

Ancillary metadata which vary within the domain |

|

Cell method |

Describes how data represent variation within cells |

Metadata constructs of a particular type can be retrieved with the following attributes of the field construct:

Attribute |

Metadata constructs |

|---|---|

Domain axes |

|

Dimension coordinates |

|

Auxiliary coordinates |

|

Coordinate references |

|

Domain ancillaries |

|

Cell measures |

|

Field ancillaries |

|

Cell methods |

Each of these attributes returns a cf.Constructs class instance that

maps metadata constructs to unique identifiers called “construct

keys”. A cf.Constructs instance has methods for selecting constructs

that meet particular criteria (see

Filtering metadata constructs). It also behaves like a

“read-only” Python dictionary, in that it has items,

keys and values methods that work exactly

like their corresponding dict methods. It also has a

get method and indexing like a Python dictionary (see

Metadata construct access for details).

>>> q, t = cf.read('file.nc')

>>> t.coordinate_references

<CF Constructs: coordinate_reference(2)>

>>> print(t.coordinate_references)

Constructs:

{'coordinatereference0': <CF CoordinateReference: standard_name:atmosphere_hybrid_height_coordinate>,

'coordinatereference1': <CF CoordinateReference: grid_mapping_name:rotated_latitude_longitude>}

>>> list(t.coordinate_references.keys())

['coordinatereference0', 'coordinatereference1']

>>> for key, value in t.coordinate_references.items():

... print(key, repr(value))

...

coordinatereference0 <CF CoordinateReference: standard_name:atmosphere_hybrid_height_coordinate>

coordinatereference1 <CF CoordinateReference: grid_mapping_name:rotated_latitude_longitude>

>>> print(t.dimension_coordinates)

Constructs:

{'dimensioncoordinate0': <CF DimensionCoordinate: atmosphere_hybrid_height_coordinate(1) >,

'dimensioncoordinate1': <CF DimensionCoordinate: grid_latitude(10) degrees>,

'dimensioncoordinate2': <CF DimensionCoordinate: grid_longitude(9) degrees>,

'dimensioncoordinate3': <CF DimensionCoordinate: time(1) days since 2018-12-01 >}

>>> print(t.domain_axes)

Constructs:

{'domainaxis0': <CF DomainAxis: size(1)>,

'domainaxis1': <CF DomainAxis: size(10)>,

'domainaxis2': <CF DomainAxis: size(9)>,

'domainaxis3': <CF DomainAxis: size(1)>}

The construct keys (e.g. 'domainaxis1') are usually generated

internally and are unique within the field construct. However,

construct keys may be different for equivalent metadata constructs

from different field constructs, and for different Python sessions.

Metadata constructs of all types may be returned by the

constructs attribute of the field construct:

>>> q.constructs

<CF Constructs: cell_method(1), dimension_coordinate(3), domain_axis(3)>

>>> print(q.constructs)

Constructs:

{'cellmethod0': <CF CellMethod: area: mean>,

'dimensioncoordinate0': <CF DimensionCoordinate: latitude(5) degrees_north>,

'dimensioncoordinate1': <CF DimensionCoordinate: longitude(8) degrees_east>,

'dimensioncoordinate2': <CF DimensionCoordinate: time(1) days since 2018-12-01 >,

'domainaxis0': <CF DomainAxis: size(5)>,

'domainaxis1': <CF DomainAxis: size(8)>,

'domainaxis2': <CF DomainAxis: size(1)>}

>>> t.constructs

<CF Constructs: auxiliary_coordinate(3), cell_measure(1), cell_method(2), coordinate_reference(2), dimension_coordinate(4), domain_ancillary(3), domain_axis(4), field_ancillary(1)>

>>> print(t.constructs)

Constructs:

{'auxiliarycoordinate0': <CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>,

'auxiliarycoordinate1': <CF AuxiliaryCoordinate: longitude(9, 10) degrees_E>,

'auxiliarycoordinate2': <CF AuxiliaryCoordinate: long_name=Grid latitude name(10) >,

'cellmeasure0': <CF CellMeasure: measure:area(9, 10) km2>,

'cellmethod0': <CF CellMethod: domainaxis1: domainaxis2: mean where land (interval: 0.1 degrees)>,

'cellmethod1': <CF CellMethod: domainaxis3: maximum>,

'coordinatereference0': <CF CoordinateReference: standard_name:atmosphere_hybrid_height_coordinate>,

'coordinatereference1': <CF CoordinateReference: grid_mapping_name:rotated_latitude_longitude>,

'dimensioncoordinate0': <CF DimensionCoordinate: atmosphere_hybrid_height_coordinate(1) >,

'dimensioncoordinate1': <CF DimensionCoordinate: grid_latitude(10) degrees>,

'dimensioncoordinate2': <CF DimensionCoordinate: grid_longitude(9) degrees>,

'dimensioncoordinate3': <CF DimensionCoordinate: time(1) days since 2018-12-01 >,

'domainancillary0': <CF DomainAncillary: ncvar%a(1) m>,

'domainancillary1': <CF DomainAncillary: ncvar%b(1) >,

'domainancillary2': <CF DomainAncillary: surface_altitude(10, 9) m>,

'domainaxis0': <CF DomainAxis: size(1)>,

'domainaxis1': <CF DomainAxis: size(10)>,

'domainaxis2': <CF DomainAxis: size(9)>,

'domainaxis3': <CF DomainAxis: size(1)>,

'fieldancillary0': <CF FieldAncillary: air_temperature standard_error(10, 9) K>}

Data¶

The field construct’s data is stored in a cf.Data class instance that

is accessed with the data attribute of the field construct.

>>> q, t = cf.read('file.nc')

>>> t.data

<CF Data(1, 10, 9): [[[262.8, ..., 269.7]]] K>

The cf.Data instance provides access to the full array of values, as

well as attributes to describe the array and methods for describing

any data compression. However, the field

construct (and any other construct that contains data) also provides

attributes for direct access.

>>> print(t.array)

[[[262.8 270.5 279.8 269.5 260.9 265.0 263.5 278.9 269.2]

[272.7 268.4 279.5 278.9 263.8 263.3 274.2 265.7 279.5]

[269.7 279.1 273.4 274.2 279.6 270.2 280. 272.5 263.7]

[261.7 260.6 270.8 260.3 265.6 279.4 276.9 267.6 260.6]

[264.2 275.9 262.5 264.9 264.7 270.2 270.4 268.6 275.3]

[263.9 263.8 272.1 263.7 272.2 264.2 260. 263.5 270.2]

[273.8 273.1 268.5 272.3 264.3 278.7 270.6 273.0 270.6]

[267.9 273.5 279.8 260.3 261.2 275.3 271.2 260.8 268.9]

[270.9 278.7 273.2 261.7 271.6 265.8 273. 278.5 266.4]

[276.4 264.2 276.3 266.1 276.1 268.1 277. 273.4 269.7]]]

>>> t.dtype

dtype('float64')

>>> t.ndim

3

>>> t.shape

(1, 10, 9)

>>> t.size

90

The field construct also has a get_data method as an

alternative means of retrieving the data instance, which allows for a

default to be returned if no data have been set; as well as a

del_data method for removing the data.

All of the methods and attributes related to the data are listed here.

Data axes¶

The data array of the field construct spans all the domain axis

constructs with the possible exception of size one domain axis

constructs. The domain axis constructs spanned by the field

construct’s data are found with the get_data_axes method of

the field construct. For example, the data of the field construct

t does not span the size one domain axis construct with key

'domainaxis3'.

>>> print(t.domain_axes)

Constructs:

{'domainaxis0': <CF DomainAxis: size(1)>,

'domainaxis1': <CF DomainAxis: size(10)>,

'domainaxis2': <CF DomainAxis: size(9)>,

'domainaxis3': <CF DomainAxis: size(1)>}

>>> t

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K>

>>> t.data.shape

(1, 10, 9)

>>> t.get_data_axes()

('domainaxis0', 'domainaxis1', 'domainaxis2')

The data may be set with the set_data method of the field

construct. The domain axis constructs spanned by the data are inferred

from the existing domain axis constructs, provided that there are no

ambiguities (such as two dimensions of the same size), in which case

they can be explicitly provided via their construct keys. In any case,

the data axes may be set at any time with the set_data_axes

method of the field construct.

>>> data = t.del_data()

>>> t.has_data()

False

>>> t.set_data(data)

>>> t.data

<CF Data(1, 10, 9): [[[262.8, ..., 269.7]]] K>

See the section field construct creation for more examples.

Date-time¶

Data representing date-times is defined as elapsed times since a

reference date-time in a particular calendar (Gregorian, by

default). The array attribute of the cf.Data instance

(and any construct that contains it) returns the elapsed times, and

the datetime_array (and any construct that contains it)

returns the data as an array of date-time objects.

>>> d = cf.Data([1, 2, 3], units='days since 2004-2-28')

>>> print(d.array)

[1 2 3]

>>> print(d.datetime_array)

[cftime.DatetimeGregorian(2004-02-29 00:00:00)

cftime.DatetimeGregorian(2004-03-01 00:00:00)

cftime.DatetimeGregorian(2004-03-02 00:00:00)]

>>> e = cf.Data([1, 2, 3], units='days since 2004-2-28', calendar='360_day')

>>> print(e.array)

[1 2 3]

>>> print(e.datetime_array)

[cftime.Datetime360Day(2004-02-29 00:00:00)

cftime.Datetime360Day(2004-02-30 00:00:00)

cftime.Datetime360Day(2004-03-01 00:00:00)]

Alternatively, date-time data may be created by providing date-time

objects or ISO 8601-like date-time strings. Date-time objects may be

cftime.datetime instances (as returned by the cf.dt and

cf.dt_vector functions), Python datetime.datetime instances, or

any other date-time object that has an equivalent API.

>>> date_time = cf.dt(2004, 2, 29)

>>> date_time

cftime.datetime(2004-02-29 00:00:00)

>>> d = cf.Data(date_time, calendar='gregorian')

>>> print(d.array)

0.0

>>> d.datetime_array

array(cftime.DatetimeGregorian(2004-02-29 00:00:00), dtype=object)

>>> date_times = cf.dt_vector(['2004-02-29', '2004-02-30', '2004-03-01'], calendar='360_day')

>>> print (date_times)

[cftime.Datetime360Day(2004-02-29 00:00:00)

cftime.Datetime360Day(2004-02-30 00:00:00)

cftime.Datetime360Day(2004-03-01 00:00:00)]

>>> e = cf.Data(date_times)

>>> print(e.array)

[0. 1. 2.]

>>> print(e.datetime_array)

[cftime.Datetime360Day(2004-02-29 00:00:00)

cftime.Datetime360Day(2004-02-30 00:00:00)

cftime.Datetime360Day(2004-03-01 00:00:00)]

>>> d = cf.Data(['2004-02-29', '2004-02-30', '2004-03-01'], calendar='360_day')

>>> d.Units

<Units: days since 2004-02-29 360_day>

>>> print(d.array)

[0. 1. 2.]

>>> print(d.datetime_array)

[cftime.Datetime360Day(2004-02-29 00:00:00)

cftime.Datetime360Day(2004-02-30 00:00:00)

cftime.Datetime360Day(2004-03-01 00:00:00)]

>>> e = cf.Data(['2004-02-29', '2004-03-01', '2004-03-02'], dt=True)

>>> e.Units

<Units: days since 2004-02-29>

>>> print(e.datetime_array)

[cftime.DatetimeGregorian(2004-02-29 00:00:00)

cftime.DatetimeGregorian(2004-03-01 00:00:00)

cftime.DatetimeGregorian(2004-03-02 00:00:00)]

>>> f = cf.Data(['2004-02-29', '2004-03-01', '2004-03-02'])

>>> print(f.array)

['2004-02-29' '2004-03-01' '2004-03-02']

>>> f.Units

<Units: >

>>> print(f.datetime_array) # Raises Exception

Traceback (most recent call last):

...

ValueError: Can't create date-time array from units <Units: >

Manipulating dimensions¶

The dimensions of a field construct’s data may be reordered, have size one dimensions removed and have new new size one dimensions included by using the following field construct methods:

Method |

Description |

|---|---|

Flatten domain axes of the field construct |

|

Reverse the direction of a data dimension |

|

Insert a new size one data dimension. The new dimension must correspond to an existing size one domain axis construct. |

|

Remove size one data dimensions |

|

Reorder data dimensions |

|

Insert all missing size one data dimensions |

>>> q, t = cf.read('file.nc')

>>> t

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K>

>>> t2 = t.squeeze()

>>> t2

<CF Field: air_temperature(grid_latitude(10), grid_longitude(9)) K>

>>> print(t2.dimension_coordinates)

Constructs:

{'dimensioncoordinate0': <CF DimensionCoordinate: atmosphere_hybrid_height_coordinate(1) >,

'dimensioncoordinate1': <CF DimensionCoordinate: grid_latitude(10) degrees>,

'dimensioncoordinate2': <CF DimensionCoordinate: grid_longitude(9) degrees>,

'dimensioncoordinate3': <CF DimensionCoordinate: time(1) days since 2018-12-01 >}

>>> t3 = t2.insert_dimension(axis='domainaxis3', position=1)

>>> t3

<CF Field: air_temperature(grid_latitude(10), time(1), grid_longitude(9)) K>

>>> t3.transpose([2, 0, 1])

<CF Field: air_temperature(grid_longitude(9), grid_latitude(10), time(1)) K>

When transposing the data dimensions, the dimensions of metadata construct data are, by default, unchanged. It is also possible to permute the data dimensions of the metadata constructs so that they have the same relative order as the field construct:

>>> t4 = t.transpose(['X', 'Z', 'Y'], constructs=True)

Data mask¶

There is always a data mask, which may be thought of as a separate

data array of booleans with the same shape as the original data. The

data mask is False where the the data has values, and True where

the data is missing. The data mask may be inspected with the

mask attribute of the field construct, which returns the data

mask in a field construct with the same metadata constructs as the

original field construct.

>>> print(q)

Field: specific_humidity (ncvar%q)

----------------------------------

Data : specific_humidity(latitude(5), longitude(8)) 1

Cell methods : area: mean

Dimension coords: latitude(5) = [-75.0, ..., 75.0] degrees_north

: longitude(8) = [22.5, ..., 337.5] degrees_east

: time(1) = [2019-01-01 00:00:00]

>>> print(q.mask)

Field: long_name=mask

---------------------

Data : long_name=mask(latitude(5), longitude(8))

Cell methods : area: mean

Dimension coords: latitude(5) = [-75.0, ..., 75.0] degrees_north

: longitude(8) = [22.5, ..., 337.5] degrees_east

: time(1) = [2019-01-01 00:00:00]

>>> print(q.mask.array)

[[False False False False False False False False]

[False False False False False False False False]

[False False False False False False False False]

[False False False False False False False False]

[False False False False False False False False]]

>>> q[[0, 4], :] = cf.masked

>>> print(q.mask.array)

[[ True True True True True True True True]

[False False False False False False False False]

[False False False False False False False False]

[False False False False False False False False]

[ True True True True True True True True]]

The _FillValue and missing_value attributes of the

field construct are not stored as values of the field construct’s

data. They are only used when writing the data to a netCDF

dataset. Therefore testing for missing

data by testing for equality to one of these property values will

produce incorrect results; the any and all methods

of the field construct should be used instead.

>>> q.mask.all()

False

>>> q.mask.any()

True

Subspacing by index¶

Creation of a new field construct which spans a subspace of the domain of an existing field construct is achieved either by indexing the field construct directly (as described in this section) or by identifying indices based on the metadata constructs (see Subspacing by metadata). The subspacing operation, in either case, also subspaces any metadata constructs of the field construct (e.g. coordinate metadata constructs) which span any of the domain axis constructs that are affected. The new field construct is created with the same properties as the original field construct.

Subspacing by indexing uses rules that are very similar to the numpy indexing rules, the only differences being:

An integer index i specified for a dimension reduces the size of this dimension to unity, taking just the i-th element, but keeps the dimension itself, so that the rank of the array is not reduced.

When two or more dimensions’ indices are sequences of integers then these indices work independently along each dimension (similar to the way vector subscripts work in Fortran). This is the same indexing behaviour as on a

Variableobject of the netCDF4 package.

For a dimension that is cyclic, a range of indices specified by a

slicethat spans the edges of the data (such as-2:3or3:-2:-1) is assumed to “wrap” around, rather then producing a null result.

>>> q, t = cf.read('file.nc')

>>> print(q)

Field: specific_humidity (ncvar%q)

----------------------------------

Data : specific_humidity(latitude(5), longitude(8)) 1

Cell methods : area: mean

Dimension coords: time(1) = [2019-01-01 00:00:00]

: latitude(5) = [-75.0, ..., 75.0] degrees_north

: longitude(8) = [22.5, ..., 337.5] degrees_east

>>> new = q[::-1, 0]

>>> print(new)

Field: specific_humidity (ncvar%q)

----------------------------------

Data : specific_humidity(latitude(5), longitude(1)) 1

Cell methods : area: mean

Dimension coords: time(1) = [2019-01-01 00:00:00]

: latitude(5) = [75.0, ..., -75.0] degrees_north

: longitude(1) = [22.5] degrees_east

>>> t

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K>

>>> t[:, :, 1]

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(1)) K>

>>> t[:, 0]

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(1), grid_longitude(9)) K>

>>> t[..., 6:3:-1, 3:6]

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(3), grid_longitude(3)) K>

>>> t[0, [2, 3, 9], [4, 8]]

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(3), grid_longitude(2)) K>

>>> t[0, :, -2]

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(1)) K>

>>> t[..., [True, False, True, True, False, False, True, False, False]]

<CF Field: air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(4)) K>

>>> q

<CF Field: specific_humidity(latitude(5), longitude(8)) 1>

>>> q.cyclic()

{'domainaxis1'}

>>> q.constructs.domain_axis_identity('domainaxis1')

'longitude'

>>> print(q[:, -2:3])

Field: specific_humidity (ncvar%q)

----------------------------------

Data : specific_humidity(latitude(5), longitude(5)) 1

Cell methods : area: mean

Dimension coords: latitude(5) = [-75.0, ..., 75.0] degrees_north

: longitude(5) = [-67.5, ..., 112.5] degrees_east

: time(1) = [2019-01-01 00:00:00]

>>> print(q[:, 3:-2:-1])

Field: specific_humidity (ncvar%q)

----------------------------------

Data : specific_humidity(latitude(5), longitude(5)) 1

Cell methods : area: mean

Dimension coords: latitude(5) = [-75.0, ..., 75.0] degrees_north

: longitude(5) = [157.5, ..., -22.5] degrees_east

: time(1) = [2019-01-01 00:00:00]

A cf.Data instance can also directly be indexed in the same way:

>>> t.data[0, [2, 3, 9], [4, 8]]

<CF Data(1, 3, 2): [[[279.6, ..., 269.7]]] K>

Assignment by index¶

Data elements can be changed by assigning to elements selected by indices of the data (as described in this section); by conditions based on the data values of the field construct or one of its metadata constructs (see Assignment by condition); or by identifying indices based on arbitrary metadata constructs (see Assignment by metadata).

Assignment by indices uses rules that are very similar to the numpy indexing rules, the only difference being:

When two or more dimensions’ indices are sequences of integers then these indices work independently along each dimension (similar to the way vector subscripts work in Fortran). This is the same indexing behaviour as on a

Variableobject of the netCDF4 package.

For a dimension that is cyclic, a range of indices specified by a

slicethat spans the edges of the data (such as-2:3or3:-2:-1) is assumed to “wrap” around, rather then producing a null result.

A single value may be assigned to any number of elements.

>>> q, t = cf.read('file.nc')

>>> t[:, 0, 0] = -1

>>> t[:, :, 1] = -2

>>> t[..., 6:3:-1, 3:6] = -3

>>> print(t.array)

[[[ -1.0 -2.0 279.8 269.5 260.9 265.0 263.5 278.9 269.2]

[272.7 -2.0 279.5 278.9 263.8 263.3 274.2 265.7 279.5]

[269.7 -2.0 273.4 274.2 279.6 270.2 280.0 272.5 263.7]

[261.7 -2.0 270.8 260.3 265.6 279.4 276.9 267.6 260.6]

[264.2 -2.0 262.5 -3.0 -3.0 -3.0 270.4 268.6 275.3]

[263.9 -2.0 272.1 -3.0 -3.0 -3.0 260.0 263.5 270.2]

[273.8 -2.0 268.5 -3.0 -3.0 -3.0 270.6 273.0 270.6]

[267.9 -2.0 279.8 260.3 261.2 275.3 271.2 260.8 268.9]

[270.9 -2.0 273.2 261.7 271.6 265.8 273.0 278.5 266.4]

[276.4 -2.0 276.3 266.1 276.1 268.1 277.0 273.4 269.7]]]

An array of values can be assigned, as long as it is broadcastable to the shape defined by the indices, using the numpy broadcasting rules.

>>> import numpy

>>> t[..., 6:3:-1, 3:6] = numpy.arange(9).reshape(3, 3)

>>> t[0, [2, 9], [4, 8]] = cf.Data([[-4, -5]])

>>> t[0, [4, 7], 0] = [[-10], [-11]]

>>> print(t.array)

[[[ -1.0 -2.0 279.8 269.5 260.9 265.0 263.5 278.9 269.2]

[272.7 -2.0 279.5 278.9 263.8 263.3 274.2 265.7 279.5]

[269.7 -2.0 273.4 274.2 -4.0 270.2 280.0 272.5 -5.0]

[261.7 -2.0 270.8 260.3 265.6 279.4 276.9 267.6 260.6]

[-10.0 -2.0 262.5 6.0 7.0 8.0 270.4 268.6 275.3]

[263.9 -2.0 272.1 3.0 4.0 5.0 260.0 263.5 270.2]

[273.8 -2.0 268.5 0.0 1.0 2.0 270.6 273.0 270.6]

[-11.0 -2.0 279.8 260.3 261.2 275.3 271.2 260.8 268.9]

[270.9 -2.0 273.2 261.7 271.6 265.8 273.0 278.5 266.4]

[276.4 -2.0 276.3 266.1 -4.0 268.1 277.0 273.4 -5.0]]]

In-place modification is also possible:

>>> print(t[0, 0, -1].array)

[[[269.2]]]

>>> t[0, 0, -1] /= -10

>>> print(t[0, 0, -1].array)

[[[-26.92]]]

A cf.Data instance can also assigned values in the same way:

>>> t.data[0, 0, -1] = -99

>>> print(t[0, 0, -1].array)

[[[-99.]]]

Masked values¶

Data array elements may be set to masked values by assigning them to

the cf.masked constant, thereby updating the the data mask.

>>> t[0, :, -2] = cf.masked

>>> print(t.array)

[[[ -1.0 -2.0 279.8 269.5 260.9 265.0 263.5 -- -99.0]

[272.7 -2.0 279.5 278.9 263.8 263.3 274.2 -- 279.5]

[269.7 -2.0 273.4 274.2 -4.0 270.2 280.0 -- -5.0]

[261.7 -2.0 270.8 260.3 265.6 279.4 276.9 -- 260.6]

[264.2 -2.0 262.5 6.0 7.0 8.0 270.4 -- 275.3]

[263.9 -2.0 272.1 3.0 4.0 5.0 260.0 -- 270.2]

[273.8 -2.0 268.5 0.0 1.0 2.0 270.6 -- 270.6]

[-11.0 -2.0 279.8 260.3 261.2 275.3 271.2 -- 268.9]

[270.9 -2.0 273.2 261.7 271.6 265.8 273.0 -- 266.4]

[276.4 -2.0 276.3 266.1 -4.0 268.1 277.0 -- -5.0]]]

By default the data mask is “hard”, meaning that masked values can not

be changed by assigning them to another value. This behaviour may be

changed by setting the hardmask attribute of the field

construct to False, thereby making the data mask “soft”.

>>> t[0, 4, -2] = 99

>>> print(t[0, 4, -2].array)

[[[--]]]

>>> t.hardmask = False

>>> t[0, 4, -2] = 99

>>> print(t[0, 4, -2].array)

[[[99.]]]

Note that this is the opposite behaviour to numpy arrays, which

assume that the mask is soft by default. The

reason for the difference is so that land-sea masks are, by default,

preserved through assignment operations.

Assignment from other field constructs¶

Another field construct can also be assigned to indices. The other field construct’s data is actually assigned, but only after being transformed so that it is broadcastable to the subspace defined by the assignment indices. This is done by using the metadata constructs of the two field constructs to create a mapping of physically compatible dimensions between the fields, and then manipulating the dimensions of the other field construct’s data to ensure that they are broadcastable.

>>> q, t = cf.read('file.nc')

>>> t0 = t.copy()

>>> u = t.squeeze(0)

>>> u.transpose(inplace=True)

>>> u.flip(inplace=True)

>>> t[...] = u

>>> t.allclose(t0)

True

>>> print(t[:, :, 1:3].array)

[[[270.5 279.8]

[268.4 279.5]

[279.1 273.4]

[260.6 270.8]

[275.9 262.5]

[263.8 272.1]

[273.1 268.5]

[273.5 279.8]

[278.7 273.2]

[264.2 276.3]]]

>>> print(u[2].array)

[[277. 273. 271.2 270.6 260. 270.4 276.9 280. 274.2 263.5]]

>>> t[:, :, 1:3] = u[2]

>>> print(t[:, :, 1:3].array)

[[[263.5 263.5]

[274.2 274.2]

[280. 280. ]

[276.9 276.9]

[270.4 270.4]

[260. 260. ]

[270.6 270.6]

[271.2 271.2]

[273. 273. ]

[277. 277. ]]]

If either of the field constructs does not have sufficient metadata to

create the such a mapping, then any manipulation of the dimensions

must be done manually, and the other field construct’s cf.Data instance

(rather than the field construct itself) may be assigned.

Units¶

The field construct, and any metadata construct that contains data,

has units which are described by the Units attribute that

stores a cf.Units object (which is identical to a cfunits.Units

object of the cfunits package). The units property

provides the units contained in the cf.Units instance, and changes

in one are reflected in the other.

>>> q, t = cf.read('file.nc')

>>> t.units

'K'

>>> t.Units

<Units: K>

>>> t.units = 'degreesC'

>>> t.units

'degreesC'

>>> t.Units

<Units: degreesC>

>>> t.Units += 273.15

>>> t.units

'K'

>>> t.Units

<Units: K>

When the units are changed, the data are automatically converted to the new units when next accessed.

>>> t.data

<CF Data(1, 10, 9): [[[262.8, ..., 269.7]]] K>

>>> t.Units = cf.Units('degreesC')

>>> t.data

<CF Data(1, 10, 9): [[[-10.35, ..., -3.45]]] degreesC>

>>> t.units = 'Kelvin'

>>> t.data

<CF Data(1, 10, 9): [[[262.8, ..., 269.7]]] Kelvin>

When assigning to the data with values that have units, the values are automatically converted to have the same units as the data.

>>> t.data

<CF Data(1, 10, 9): [[[262.8, ..., 269.7]]] Kelvin>

>>> t[0, 0, 0] = cf.Data(1)

>>> t.data

<CF Data(1, 10, 9): [[[1.0, ..., 269.7]]] Kelvin>

>>> t[0, 0, 0] = cf.Data(1, 'degreesC')

>>> t.data

<CF Data(1, 10, 9): [[[274.15, ..., 269.7]]] Kelvin>

Automatic units conversions are also carried out between operands during mathematical operations.

Calendar¶

When the data represents date-times, the cf.Units instance describes

both the units and calendar of the data. If the latter is missing then

the Gregorian calender is assumed, as per the CF conventions. The

calendar property provides the calendar contained in the

cf.Units instance, and changes in one are reflected in the other.

>>> air_temp = cf.read('air_temperature.nc')[0]

>>> time = air_temp.coordinate('time')

>>> time.units

'days since 1860-1-1'

>>> time.calendar

'360_day'

>>> time.Units

<Units: days since 1860-1-1 360_day>

Filtering metadata constructs¶

A cf.Constructs instance has filtering methods for selecting

constructs that meet various criteria:

Method |

Filter criteria |

|---|---|

Metadata construct identity |

|

Metadata construct type |

|

Property values |

|

The domain axis constructs spanned by the data |

|

The number of domain axis constructs spanned by the data |

|

The size domain axis constructs |

|

Measure value (for cell measure constructs) |

|

Method value (for cell method constructs) |

|

Whether or not there could be be data. |

|

Construct key |

|

NetCDF variable name (see the netCDF interface) |

|

NetCDF dimension name (see the netCDF interface) |

Each of these methods returns a new cf.Constructs instance that

contains the selected metadata constructs.

>>> q, t = cf.read('file.nc')

>>> print(t.constructs.filter_by_type('dimension_coordinate'))

Constructs:

{'dimensioncoordinate0': <CF DimensionCoordinate: atmosphere_hybrid_height_coordinate(1) >,

'dimensioncoordinate1': <CF DimensionCoordinate: grid_latitude(10) degrees>,

'dimensioncoordinate2': <CF DimensionCoordinate: grid_longitude(9) degrees>,

'dimensioncoordinate3': <CF DimensionCoordinate: time(1) days since 2018-12-01 >}

>>> print(t.constructs.filter_by_type('cell_method', 'field_ancillary'))

Constructs:

{'cellmethod0': <CF CellMethod: domainaxis1: domainaxis2: mean where land (interval: 0.1 degrees)>,

'cellmethod1': <CF CellMethod: domainaxis3: maximum>,

'fieldancillary0': <CF FieldAncillary: air_temperature standard_error(10, 9) K>}

>>> print(t.constructs.filter_by_property(

... standard_name='air_temperature standard_error'))

Constructs:

{'fieldancillary0': <CF FieldAncillary: air_temperature standard_error(10, 9) K>}

>>> print(t.constructs.filter_by_property(

... standard_name='air_temperature standard_error',

... units='K'))

Constructs:

{'fieldancillary0': <CF FieldAncillary: air_temperature standard_error(10, 9) K>}

>>> print(t.constructs.filter_by_property(

... 'or',

... standard_name='air_temperature standard_error',

... units='m'))

Constructs:

{'domainancillary0': <CF DomainAncillary: ncvar%a(1) m>,

'domainancillary2': <CF DomainAncillary: surface_altitude(10, 9) m>,

'fieldancillary0': <CF FieldAncillary: air_temperature standard_error(10, 9) K>}

>>> print(t.constructs.filter_by_axis('and', 'domainaxis1'))

Constructs:

{'auxiliarycoordinate0': <CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>,

'auxiliarycoordinate1': <CF AuxiliaryCoordinate: longitude(9, 10) degrees_E>,

'auxiliarycoordinate2': <CF AuxiliaryCoordinate: long_name=Grid latitude name(10) >,

'cellmeasure0': <CF CellMeasure: measure:area(9, 10) km2>,

'dimensioncoordinate1': <CF DimensionCoordinate: grid_latitude(10) degrees>,

'domainancillary2': <CF DomainAncillary: surface_altitude(10, 9) m>,

'fieldancillary0': <CF FieldAncillary: air_temperature standard_error(10, 9) K>}

>>> print(t.constructs.filter_by_measure('area'))

Constructs:

{'cellmeasure0': <CF CellMeasure: measure:area(9, 10) km2>}

>>> print(t.constructs.filter_by_method('maximum'))

Constructs:

{'cellmethod1': <CF CellMethod: domainaxis3: maximum>}

As each of these methods returns a cf.Constructs instance, it is

easy to perform further filters on their results:

>>> print(t.constructs.filter_by_type('auxiliary_coordinate').filter_by_axis('and', 'domainaxis2'))

Constructs:

{'auxiliarycoordinate0': <CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>,

'auxiliarycoordinate1': <CF AuxiliaryCoordinate: longitude(9, 10) degrees_E>}

>>> c = t.constructs.filter_by_type('dimension_coordinate')

>>> d = c.filter_by_property(units='degrees')

>>> print(d)

Constructs:

{'dimensioncoordinate1': <CF DimensionCoordinate: grid_latitude(10) degrees>,

'dimensioncoordinate2': <CF DimensionCoordinate: grid_longitude(9) degrees>}

Construct identities¶

Another method of selection is by metadata construct “identity”.

Construct identities are used to describe constructs when they are

inspected, and so it is often convenient to copy these identities when

selecting metadata constructs. For example, the three auxiliary

coordinate constructs in the field construct t

have identities 'latitude', 'longitude' and 'long_name=Grid

latitude name'.

A construct’s identity may be any one of the following

The value of the

standard_nameproperty, e.g.'air_temperature',The value of the

idattribute, preceded by'id%=',The physical nature of the construct denoted by

'X','Y','Z'or'T', denoting longitude (or x-projection), latitude (or y-projection), vertical and temporal constructs respectively,The value of any property, preceded by the property name and an equals, e.g.

'long_name=Air Temperature','axis=X','foo=bar', etc.,The cell measure, preceded by “measure:”, e.g.

'measure:volume'The cell method, preceded by “method:”, e.g.

'method:maximum'The netCDF variable name, preceded by “ncvar%”, e.g.

'ncvar%tas'(see the netCDF interface),The netCDF dimension name, preceded by “ncdim%” e.g.

'ncdim%z'(see the netCDF interface), andThe construct key, optionally proceeded by “key%”, e.g.

'auxiliarycoordinate2'or'key%auxiliarycoordinate2'.

>>> print(t)

Field: air_temperature (ncvar%ta)

---------------------------------

Data : air_temperature(atmosphere_hybrid_height_coordinate(1), grid_latitude(10), grid_longitude(9)) K

Cell methods : grid_latitude(10): grid_longitude(9): mean where land (interval: 0.1 degrees) time(1): maximum

Field ancils : air_temperature standard_error(grid_latitude(10), grid_longitude(9)) = [[0.81, ..., 0.78]] K

Dimension coords: time(1) = [2019-01-01 00:00:00]

: atmosphere_hybrid_height_coordinate(1) = [1.5]

: grid_latitude(10) = [2.2, ..., -1.76] degrees

: grid_longitude(9) = [-4.7, ..., -1.18] degrees

Auxiliary coords: latitude(grid_latitude(10), grid_longitude(9)) = [[53.941, ..., 50.225]] degrees_N

: longitude(grid_longitude(9), grid_latitude(10)) = [[2.004, ..., 8.156]] degrees_E

: long_name=Grid latitude name(grid_latitude(10)) = [--, ..., 'kappa']

Cell measures : measure:area(grid_longitude(9), grid_latitude(10)) = [[2391.9657, ..., 2392.6009]] km2

Coord references: grid_mappng_name:rotated_latitude_longitude

: standard_name:atmosphere_hybrid_height_coordinate

Domain ancils : ncvar%a(atmosphere_hybrid_height_coordinate(1)) = [10.0] m

: ncvar%b(atmosphere_hybrid_height_coordinate(1)) = [20.0]

: surface_altitude(grid_latitude(10), grid_longitude(9)) = [[0.0, ..., 270.0]] m

>>> print(t.constructs.filter_by_identity('X'))

Constructs:

{'auxiliarycoordinate1': <CF AuxiliaryCoordinate: longitude(9, 10) degrees_E>,

'dimensioncoordinate2': <CF DimensionCoordinate: grid_longitude(9) degrees>}

>>> print(t.constructs.filter_by_identity('latitude'))

Constructs:

{'auxiliarycoordinate0': <CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>}

>>> print(t.constructs.filter_by_identity('long_name=Grid latitude name'))

Constructs:

{'auxiliarycoordinate2': <CF AuxiliaryCoordinate: long_name=Grid latitude name(10) >}

>>> print(t.constructs.filter_by_identity('measure:area'))

Constructs:

{'cellmeasure0': <CF CellMeasure: measure:area(9, 10) km2>}

>>> print(t.constructs.filter_by_identity('ncvar%b'))

Constructs:

{'domainancillary1': <CF DomainAncillary: ncvar%b(1) >}

The identity returned by the identity method is, by default, the

least ambiguous identity (defined in the method documentation), but it

may be restricted in various ways. See the strict and relaxed

keywords.

As a further convenience, selection by construct identity is also

possible by providing identities to a call of a cf.Constructs

instance itself, and this technique for selecting constructs by

identity will be used in the rest of this tutorial:

>>> print(t.constructs.filter_by_identity('latitude'))

Constructs:

{'auxiliarycoordinate0': <CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>}

>>> print(t.constructs('latitude'))

Constructs:

{'auxiliarycoordinate0': <CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>}

Selection by construct key is useful for systematic metadata construct access, or for when a metadata construct is not identifiable by other means:

>>> print(t.constructs.filter_by_key('domainancillary2'))

Constructs:

{'domainancillary2': <CF DomainAncillary: surface_altitude(10, 9) m>}

>>> print(t.constructs.filter_by_key('cellmethod1'))

Constructs:

{'cellmethod1': <CF CellMethod: domainaxis3: maximum>}

>>> print(t.constructs.filter_by_key('auxiliarycoordinate2', 'cellmeasure0'))

Constructs:

{'auxiliarycoordinate2': <CF AuxiliaryCoordinate: long_name=Grid latitude name(10) >,

'cellmeasure0': <CF CellMeasure: measure:area(9, 10) km2>}

If no constructs match the given criteria, then an “empty”

cf.Constructs instance is returned:

>>> c = t.constructs('radiation_wavelength')

>>> c

<CF Constructs: >

>>> print(c)

Constructs:

{}

>>> len(c)

0

The constructs that were not selected by a filter may be returned by

the inverse_filter method applied to the results of

filters:

>>> c = t.constructs.filter_by_type('auxiliary_coordinate')

>>> c

<CF Constructs: auxiliary_coordinate(3)>

>>> c.inverse_filter()

<CF Constructs: cell_measure(1), cell_method(2), coordinate_reference(2), dimension_coordinate(4), domain_ancillary(3), domain_axis(4), field_ancillary(1)>

Note that selection by construct type is equivalent to using the particular method of the field construct for retrieving that type of metadata construct:

>>> print(t.constructs.filter_by_type('cell_measure'))

Constructs:

{'cellmeasure0': <CF CellMeasure: measure:area(9, 10) km2>}

>>> print(t.cell_measures)

Constructs:

{'cellmeasure0': <CF CellMeasure: measure:area(9, 10) km2>}

Metadata construct access¶

An individual metadata construct, or its construct key, may be returned by any of the following techniques:

with the

constructmethod of a field construct,

>>> t.construct('latitude')

<CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>

>>> t.construct('latitude', key=True)

'auxiliarycoordinate0'

with the

construct_keyandget_constructmethods of a field construct:

>>> key = t.construct_key('latitude')

>>> t.get_construct(key)

<CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>

with the

valuemethod of acf.Constructsinstance that contains one construct,

>>> t.constructs('latitude').value()

<CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>

with the

getmethod of acf.Constructsinstance, or

>>> t.constructs.get(key)

<CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>

by indexing a

cf.Constructsinstance with a construct key.

>>> t.constructs[key]

<CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>

In addition, an individual metadata construct of a particular type can be retrieved with the following methods of the field construct:

Method |

Metadata construct |

|---|---|

Domain axis |

|

Dimension coordinate |

|

Auxiliary coordinate |

|

Coordinate reference |

|

Domain ancillary |

|

Cell measure |

|

Field ancillary |

|

Cell method |

These methods will only look for the given identity amongst constructs of the chosen type.

>>> t.auxiliary_coordinate('latitude')

<CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>

>>> t.auxiliary_coordinate('latitude', key=True)

'auxiliarycoordinate0'

The construct method of the field construct, the above

methods for finding a construct of a particular type, and the

value method of the cf.Constructs instance will all

raise an exception of there is not a unique metadata construct to

return, but this may be replaced with returning a default value or

raising a customised exception:

>>> t.construct('measure:volume') # Raises Exception

Traceback (most recent call last):

...

ValueError: Can't return zero constructs

>>> t.construct('measure:volume', False)

False

>>> c = t.constructs.filter_by_measure('volume')

>>> len(c)

0

>>> c.value() # Raises Exception

Traceback (most recent call last):

...

ValueError: Can't return zero constructs

>>> c.value(default='No construct')

'No construct'

>>> c.value(default=KeyError('My message')) # Raises Exception

Traceback (most recent call last):

...

KeyError: 'My message'

>>> d = t.constructs('units=degrees')

>>> len(d)

2

>>> d.value() # Raises Exception

Traceback (most recent call last):

...

ValueError: Can't return 2 constructs

>>> print(d.value(default=None))

None

The get method of a cf.Constructs instance accepts an

optional second argument to be returned if the construct key does not

exist, exactly like the Python dict.get method.

Metadata construct properties¶

Metadata constructs share the same API as the field construct for accessing their properties:

>>> lon = q.construct('longitude')

>>> lon

<CF DimensionCoordinate: longitude(8) degrees_east>

>>> lon.set_property('long_name', 'Longitude')

>>> lon.properties()

{'units': 'degrees_east',

'long_name': 'Longitude',

'standard_name': 'longitude'}

>>> area = t.constructs.filter_by_property(units='km2').value()

>>> area

<CF CellMeasure: measure:area(9, 10) km2>

>>> area.identity()

'measure:area'

>>> area.identities()

['measure:area', 'units=km2', 'ncvar%cell_measure']

Metadata construct data¶

Metadata constructs share the a similar API as the field construct as the field construct for accessing their data:

>>> lon = q.constructs('longitude').value()

>>> lon

<CF DimensionCoordinate: longitude(8) degrees_east>

>>> lon.data

<CF Data(8): [22.5, ..., 337.5] degrees_east>

>>> lon.data[2]

<CF Data(1): [112.5] degrees_east>

>>> lon.data[2] = 133.33

>>> print(lon.array)

[ 22.5 67.5 133.33 157.5 202.5 247.5 292.5 337.5 ]

>>> lon.data[2] = 112.5

The domain axis constructs spanned by a particular metadata

construct’s data are found with the get_data_axes method

of the field construct:

>>> key = t.construct_key('latitude')

>>> key

'auxiliarycoordinate0'

>>> t.get_data_axes(key)

('domainaxis1', 'domainaxis2')

The domain axis constructs spanned by all the data of all metadata

construct may be found with the data_axes method of the

field construct’s cf.Constructs instance:

>>> t.constructs.data_axes()

{'auxiliarycoordinate0': ('domainaxis1', 'domainaxis2'),

'auxiliarycoordinate1': ('domainaxis2', 'domainaxis1'),

'auxiliarycoordinate2': ('domainaxis1',),

'cellmeasure0': ('domainaxis2', 'domainaxis1'),

'dimensioncoordinate0': ('domainaxis0',),

'dimensioncoordinate1': ('domainaxis1',),

'dimensioncoordinate2': ('domainaxis2',),

'dimensioncoordinate3': ('domainaxis3',),

'domainancillary0': ('domainaxis0',),

'domainancillary1': ('domainaxis0',),

'domainancillary2': ('domainaxis1', 'domainaxis2'),

'fieldancillary0': ('domainaxis1', 'domainaxis2')}

A size one domain axis construct that is not spanned by the field

construct’s data may still be spanned by the data of metadata

constructs. For example, the data of the field construct t

does not span the size one domain axis construct

with key 'domainaxis3', but this domain axis construct is spanned

by a “time” dimension coordinate construct (with key

'dimensioncoordinate3'). Such a dimension coordinate (i.e. one

that applies to a domain axis construct that is not spanned by the

field construct’s data) corresponds to a CF-netCDF scalar coordinate

variable.

Time¶

Constructs (including the field constructs) that represent elapsed

time have data array values that provide elapsed time since a

reference date. These constructs are identified by the presence of

“reference time” units. The data values may be converted into the

date-time objects of the cftime package with the datetime_array

attribute of the construct, or its cf.Data instance.

>>> time = q.construct('time')

>>> time

<CF DimensionCoordinate: time(1) days since 2018-12-01 >

>>> time.get_property('units')

'days since 2018-12-01'

>>> time.get_property('calendar', default='standard')

'standard'

>>> print(time.array)

[31.]

>>> print(time.datetime_array)

[cftime.DatetimeGregorian(2019-01-01 00:00:00)]

Time duration¶

A period of time may stored in a cf.TimeDuration object. For many

applications, a cf.Data instance with appropriate units (such as

seconds) is equivalent, but a cf.TimeDuration instance also

allows units of calendar years or months; and may be relative to a

date-time offset.

>>> cm = cf.TimeDuration(1, 'calendar_month', day=16, hour=12)

>>> cm

<CF TimeDuration: P1M (Y-M-16 12:00:00)>

cf.TimeDuration objects support comparison and arithmetic operations

with numeric scalars, cf.Data instances and date-time objects:

>>> cf.dt(2000, 2, 1) + cm

cftime.datetime(2000-03-01 00:00:00)

>>> cf.Data([1, 2, 3], 'days since 2000-02-01') + cm

<CF Data(3): [2000-03-02 00:00:00, 2000-03-03 00:00:00, 2000-03-04 00:00:00] gregorian>

Date-time ranges that span the time duration can also be created:

>>> cm.interval(cf.dt(2000, 2, 1))

(cftime.DatetimeGregorian(2000-02-01 00:00:000),

cftime.DatetimeGregorian(2000-03-01 00:00:000))

>>> cm.bounds(cf.dt(2000, 2, 1))

(cftime.DatetimeGregorian(2000-01-16 12:00:00),

cftime.DatetimeGregorian(2000-02-16 12:00:00))

Time duration constructors¶

For convenience, cf.TtimeDuration instances can be created with the

following constructors:

Constructor |

Description |

|---|---|

A |

|

A |

|

A |

|

A |

|

A |

|

A |

>>> cf.D()

<CF TimeDuration: P1D (Y-M-D 00:00:00)>

>>> cf.Y(10, month=12)

<CF TimeDuration: P10Y (Y-12-01 00:00:00)>

----

Domain¶

The domain of the CF data model is not a

construct, but is defined collectively by various other metadata

constructs included in the field construct. It is represented by the

cf.Domain class. The domain instance may be accessed with the

domain attribute, or get_domain method, of the field

construct.

>>> domain = t.domain

>>> domain

<CF Domain: {1, 1, 9, 10}>

>>> print(domain)

Dimension coords: atmosphere_hybrid_height_coordinate(1) = [1.5]

: grid_latitude(10) = [2.2, ..., -1.76] degrees

: grid_longitude(9) = [-4.7, ..., -1.18] degrees

: time(1) = [2019-01-01 00:00:00]

Auxiliary coords: latitude(grid_latitude(10), grid_longitude(9)) = [[53.941, ..., 50.225]] degrees_N

: longitude(grid_longitude(9), grid_latitude(10)) = [[2.004, ..., 8.156]] degrees_E

: long_name=Grid latitude name(grid_latitude(10)) = [--, ..., 'kappa']

Cell measures : measure:area(grid_longitude(9), grid_latitude(10)) = [[2391.9657, ..., 2392.6009]] km2

Coord references: grid_mapping_name:rotated_latitude_longitude

: standard_name:atmosphere_hybrid_height_coordinate

Domain ancils : ncvar%a(atmosphere_hybrid_height_coordinate(1)) = [10.0] m

: ncvar%b(atmosphere_hybrid_height_coordinate(1)) = [20.0]

: surface_altitude(grid_latitude(10), grid_longitude(9)) = [[0.0, ..., 270.0]] m

>>> description = domain.dump(display=False)

Changes to domain instance are seen by the field construct, and vice versa. This is because the domain instance is merely a “view” of the relevant metadata constructs contained in the field construct.

>>> domain_latitude = t.domain.constructs('latitude').value()

>>> field_latitude = t.constructs('latitude').value()

>>> domain_latitude.set_property('test', 'set by domain')

>>> print(field_latitude.get_property('test'))

set by domain

>>> field_latitude.set_property('test', 'set by field')

>>> print(domain_latitude.get_property('test'))

set by field

>>> domain_latitude.del_property('test')

'set by field'

>>> field_latitude.has_property('test')

False

All of the methods and attributes related to the domain are listed here.

Metadata construct types¶

Domain axes¶

A domain axis metadata construct specifies the number of points along

an independent axis of the field construct’s domain and is stored in a

cf.DomainAxis instance. The size of the axis is retrieved with

the get_size method of the domain axis construct.

>>> print(q.domain_axes)

Constructs:

{'domainaxis0': <CF DomainAxis: size(5)>,

'domainaxis1': <CF DomainAxis: size(8)>,

'domainaxis2': <CF DomainAxis: size(1)>}

>>> d = q.domain_axes.get('domainaxis1')

>>> d

<CF DomainAxis: size(8)>

>>> d.get_size()

8

Coordinates¶

There are two types of coordinate construct, dimension and auxiliary

coordinate constructs, which can be retrieved together with the

coordinates method of the field construct, as well as

individually with the auxiliary_coordinates and

dimension_coordinates methods.

>>> print(t.coordinates)

Constructs:

{'auxiliarycoordinate0': <CF AuxiliaryCoordinate: latitude(10, 9) degrees_N>,

'auxiliarycoordinate1': <CF AuxiliaryCoordinate: longitude(9, 10) degrees_E>,

'auxiliarycoordinate2': <CF AuxiliaryCoordinate: long_name=Grid latitude name(10) >,

'dimensioncoordinate0': <CF DimensionCoordinate: atmosphere_hybrid_height_coordinate(1) >,

'dimensioncoordinate1': <CF DimensionCoordinate: grid_latitude(10) degrees>,

'dimensioncoordinate2': <CF DimensionCoordinate: grid_longitude(9) degrees>,

'dimensioncoordinate3': <CF DimensionCoordinate: time(1) days since 2018-12-01 >}

Bounds¶

A coordinate construct may contain an array of cell bounds that

provides the extent of each cell by defining the locations of the cell

vertices. This is in addition to the main data array that contains a

grid point location for each cell. The cell bounds are stored in a

cf.Bounds class instance that is accessed with the

bounds attribute, or get_bounds method, of

the coordinate construct.

A cf.Bounds instance shares the the same API as the field

construct for accessing its data.

>>> lon = t.constructs('grid_longitude').value()

>>> bounds = lon.bounds

>>> bounds

<CF Bounds: grid_longitude(9, 2) degrees>

>>> bounds.data

<CF Data(9, 2): [[-4.92, ..., -0.96]] degrees>

>>> print(bounds.array)

[[-4.92 -4.48]

[-4.48 -4.04]

[-4.04 -3.6 ]

[-3.6 -3.16]

[-3.16 -2.72]

[-2.72 -2.28]

[-2.28 -1.84]

[-1.84 -1.4 ]

[-1.4 -0.96]]

The cf.Bounds instance inherits the descriptive properties from its

parent coordinate construct, but it may also have its own properties

(although setting these is not recommended).

>>> bounds.inherited_properties()

{'standard_name': 'grid_longitude',

'units': 'degrees'}

>>> bounds.properties()

{'units': 'degrees'}

Geometry cells¶

For many geospatial applications, cell bounds can not be represented by a simple line or polygon, and different cells may have different numbers of nodes describing their bounds. For example, if each cell describes the areal extent of a watershed, then it is likely that some watersheds will require more nodes than others. Such cells are called geometries.

If a coordinate construct represents geometries then it will have a

“geometry” attribute (not a CF property) with one of the values 'point',

‘line' or 'polygon'.

This is illustrated with the file geometry.nc (found in the

sample datasets):

>>> f = cf.read('geometry.nc')[0]

>>> print(f)

Field: preciptitation_amount (ncvar%pr)

---------------------------------------

Data : preciptitation_amount(cf_role=timeseries_id(2), time(4))

Dimension coords: time(4) = [2000-01-02 00:00:00, ..., 2000-01-05 00:00:00]

Auxiliary coords: latitude(cf_role=timeseries_id(2)) = [25.0, 7.0] degrees_north

: longitude(cf_role=timeseries_id(2)) = [10.0, 40.0] degrees_east

: altitude(cf_role=timeseries_id(2)) = [5000.0, 20.0] m

: cf_role=timeseries_id(cf_role=timeseries_id(2)) = [b'x1', b'y2']

Coord references: grid_mapping_name:latitude_longitude

>>> lon = f.auxiliary_coordinate('X')

>>> lon.dump()

Auxiliary coordinate: longitude

standard_name = 'longitude'

units = 'degrees_east'

Data(2) = [10.0, 40.0] degrees_east

Geometry: polygon

Bounds:axis = 'X'

Bounds:standard_name = 'longitude'

Bounds:units = 'degrees_east'

Bounds:Data(2, 3, 4) = [[[20.0, ..., --]]] degrees_east

Interior Ring:Data(2, 3) = [[0, ..., --]]

>>> lon.get_geometry()

'polygon'

Bounds for geometry cells are also stored in a Bounds instance, but

one that always has two extra trailing dimensions (rather than

one). The fist trailing dimension indexes the distinct parts of a